University rankings overlook academic retractions: A postplagiarism perspective

Dec 13, 2024 — by Gengyan Tang. The original article was published on Postplagiarism.

Each year, when university rankings are released, many institutions proudly showcase their standings on their official websites, a ritual in higher education that rarely changes. Critics often argue that these rankings are essentially “paid badges” that fail to reflect a school’s true academic reputation and research quality.

However, in the postplagiarism era, these criticisms should extend to another overlooked issue: university rankings’ neglect of the growing number of retractions. Our recent study sheds light on the misuse of AI-generated content (AIGC) in publications and related plagiarism concerns, issues that may lead to more retractions across academia.

These retractions point to crucial problems in research integrity, quality assurance, and institutional accountability, precisely the concerns emphasized in the postplagiarism era. Yet ranking systems have largely ignored these factors, continuing to treat metrics such as publication counts and citation rates as measures of success. These skewed metrics are then presented to consumers, namely students and parents, as guidance for their educational investments.

The Numbers Game: Flaws in University Ranking Systems

The basic mechanism of university rankings is a game of metrics. Ranking bodies design indicators, collect data, and then condense that data into scores. For instance, research quality might be broken down into dimensions such as paper count, citation count, or average citations per researcher, each of which is weighted and combined into an overall “research quality” score.
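To make this aggregation concrete, here is a minimal Python sketch of how several research indicators might be rescaled and combined into one weighted score. The indicator names, weights, and institution figures are all hypothetical, and min-max scaling is an assumed normalization choice; QS, THE, US News, and ShanghaiRanking each publish their own indicators, normalization methods, and weightings.

```python
# Hypothetical raw indicator values for three fictional institutions.
RAW = {
    "University A": {"papers": 1200, "citations": 15000, "citations_per_researcher": 12.0},
    "University B": {"papers": 800, "citations": 20000, "citations_per_researcher": 25.0},
    "University C": {"papers": 400, "citations": 4000, "citations_per_researcher": 8.0},
}

# Hypothetical weights; real rankings use their own indicators and weights.
WEIGHTS = {"papers": 0.4, "citations": 0.3, "citations_per_researcher": 0.3}


def min_max_scale(values: dict[str, float]) -> dict[str, float]:
    """Rescale one indicator across institutions onto a 0-100 range."""
    lo, hi = min(values.values()), max(values.values())
    if hi == lo:  # every institution ties on this indicator
        return {name: 100.0 for name in values}
    return {name: 100.0 * (v - lo) / (hi - lo) for name, v in values.items()}


def research_quality_scores(
    raw: dict[str, dict[str, float]], weights: dict[str, float]
) -> dict[str, float]:
    """Collapse several indicators into one weighted 'research quality' score."""
    # Scale each indicator separately across all institutions.
    scaled = {
        indicator: min_max_scale({inst: data[indicator] for inst, data in raw.items()})
        for indicator in weights
    }
    total_weight = sum(weights.values())
    # Weighted average of the scaled indicators for each institution.
    return {
        inst: sum(weights[ind] * scaled[ind][inst] for ind in weights) / total_weight
        for inst in raw
    }


if __name__ == "__main__":
    scores = research_quality_scores(RAW, WEIGHTS)
    for inst, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{inst}: {score:.1f}")
```

Nothing in this calculation asks whether a counted paper has since been retracted: a retracted article raises `papers`, and any citations it attracted raise `citations`, exactly as a sound article would.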

Yet, as past reports have shown, such ranking methods have serious flaws. Take Saveetha Dental College in Chennai, India, which made headlines for reportedly pressuring undergraduates to publish papers, thereby climbing the rankings.

In the end, these quantified standards often become little more than a numbers game, manipulated by savvy players. Beyond metric manipulation, however, another critical blind spot persists: the actual quality of the research being counted.

The Big Retractions: Are Rankings Paying Attention?

Most university rankings heavily weigh “research quality” or “research strength,” often by counting papers published by a university’s researchers in high-impact journals or indexed databases.

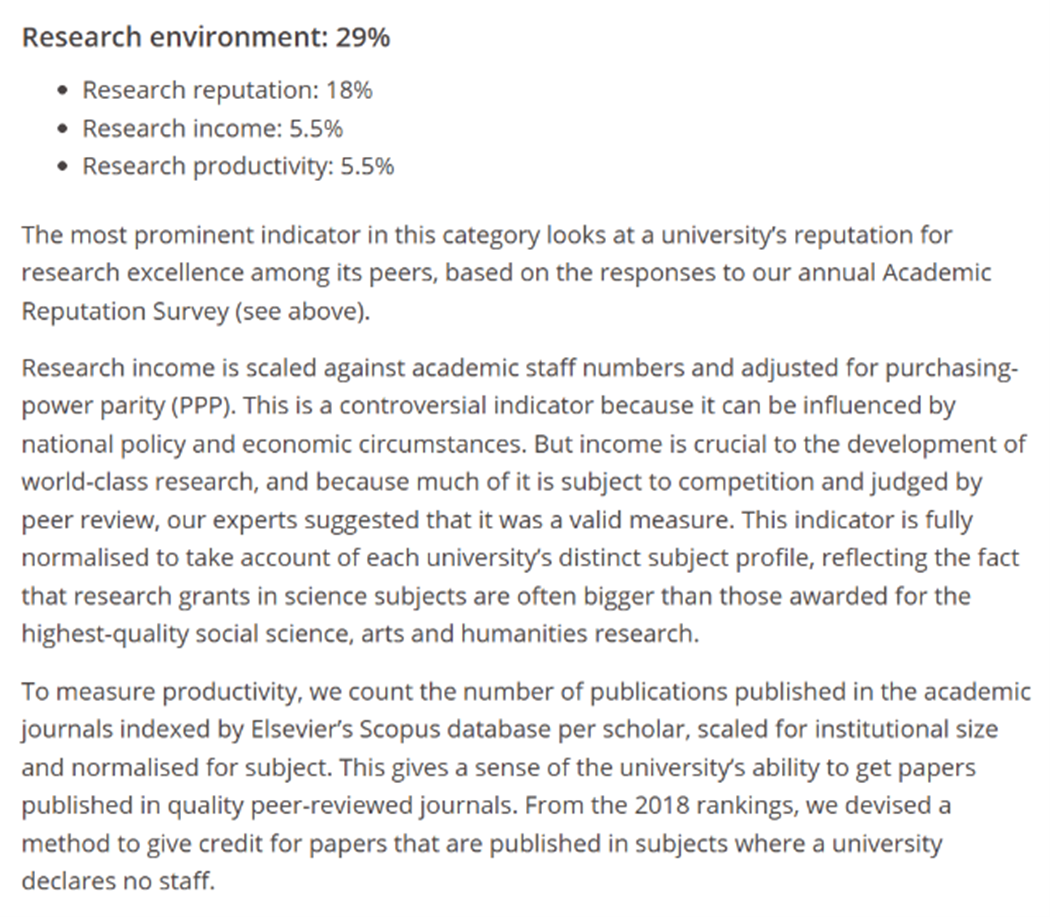

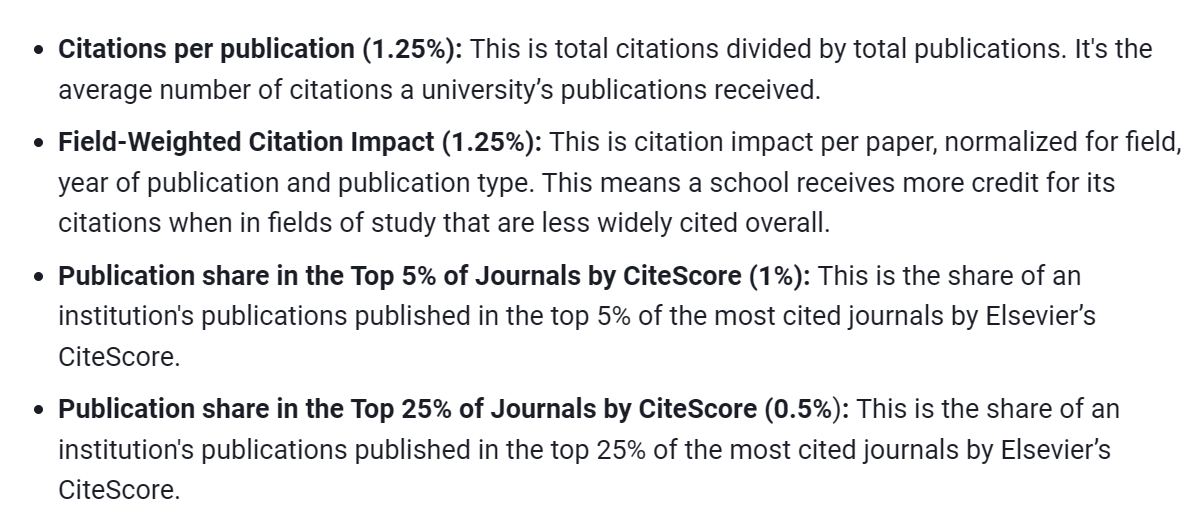

But when papers are retracted for data falsification, plagiarism, or other research misconduct, it is unclear whether these rankings account for them. The four major ranking systems (QS, US News, THE, and ShanghaiRanking) do not specify how they handle retractions within their metrics.

Figure 1. Explanation of THE’s Ranking Indicators

Figure 2. Explanation of US News’s Ranking Indicators

Figure 3. Explanation of QS’s Ranking Indicators

Figure 4. Explanation of ShanghaiRanking’s Ranking Indicators

Another tricky issue lies in timing: a paper might be published, counted, and then later retracted. Should rankings adjust their scores retroactively?

Such issues expose potential flaws in ranking methodologies, particularly regarding research integrity.

A Possible Solution

I suggest some potential solutions at two levels, principled and practical:

At the principled level, the postplagiarism era underscores the importance of responsibility. Dr. Eaton notes, “Humans, not technology, are held responsible for the accuracy, validity, reliability, and trustworthiness of scientific and scholarly outputs.” This principle should apply to the way university rankings formulate their metrics:

- Accuracy: University rankings must accurately account for retracted papers in their metrics. Only by doing so can they ensure fairness to institutions that are committed to maintaining a culture of research integrity.
- Validity: When university rankings tally publication counts, citation rates, and other research metrics, they must ensure that only valid papers are included. Articles retracted for plagiarism or falsification should be excluded from these counts.
- Reliability: University rankings should also consider the reliability of the research output they measure. Papers that rely excessively on generative AI tools and are consequently of substandard quality should not contribute to an institution’s research score.
- Trustworthiness: University rankings need to demonstrate their commitment to research integrity in the postplagiarism era. By doing so, they can establish themselves as trustworthy evaluators in higher education.

Furthermore, Dr. Eaton suggests that in the postplagiarism era, traditional definitions of plagiarism may no longer apply. The same perspective can be extended to how we understand university rankings: in the postplagiarism era, the definition of a university ranking should incorporate the core values of research and academic integrity. This shift is necessary to ensure that ranking systems reflect not only an institution’s academic quality and reputation but also its commitment to research and academic integrity, giving parents and students a more comprehensive basis for evaluation.

At the practical level, rankings could incorporate a “research integrity metric” or adjust an institution’s research quality score based on its number of retractions. This could be framed as a penalty mechanism, for example, by subtracting retracted papers from the institution’s total publications for the corresponding year.
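The subtraction idea can be sketched in a few lines of Python. This is a hypothetical illustration, not a mechanism any ranking is known to use: `Paper`, `adjusted_publication_counts`, and the `extra_penalty` parameter are invented names. It assumes each retraction is charged to the paper’s publication year, so recomputing the counts later also addresses the timing problem of a paper that is counted first and retracted afterwards.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Paper:
    """Hypothetical record for one publication attributed to an institution."""
    paper_id: str
    published_year: int
    retracted_year: Optional[int] = None  # None means never retracted


def adjusted_publication_counts(
    papers: Iterable[Paper], as_of_year: int, extra_penalty: float = 0.0
) -> dict[int, float]:
    """Publication count per year, minus papers retracted on or before as_of_year.

    Retractions are charged to the paper's publication year, so rerunning
    the calculation later retroactively lowers earlier years' counts.
    extra_penalty (hypothetical) deducts a further amount per retraction;
    the default of 0.0 is plain subtraction.
    """
    totals: dict[int, int] = defaultdict(int)
    retracted: dict[int, int] = defaultdict(int)
    for paper in papers:
        totals[paper.published_year] += 1
        if paper.retracted_year is not None and paper.retracted_year <= as_of_year:
            retracted[paper.published_year] += 1
    # Floor at zero so a heavy penalty never produces a negative count.
    return {
        year: max(0.0, totals[year] - retracted[year] * (1 + extra_penalty))
        for year in sorted(totals)
    }


# Hypothetical sample data for one fictional institution.
SAMPLE = [
    Paper("paper-1", 2021),
    Paper("paper-2", 2021, retracted_year=2023),
    Paper("paper-3", 2021),
    Paper("paper-4", 2022, retracted_year=2022),
    Paper("paper-5", 2022),
]

if __name__ == "__main__":
    print(adjusted_publication_counts(SAMPLE, as_of_year=2022))
    print(adjusted_publication_counts(SAMPLE, as_of_year=2023))
```

With this sample data, recomputing as of 2023 lowers the 2021 count from 3 to 2, because one 2021 paper was retracted in 2023. Charging retractions to the publication year rather than the retraction year is a design choice; a ranking could equally deduct them in the year the retraction is issued.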

In short, university rankings must take research integrity into account, especially when assessing research strength or quality. Only by doing so can they fairly represent institutions committed to genuine, ethical research.

About the author: Gengyan Tang, MA, is a PhD student in the Werklund School of Education at the University of Calgary. His research interests include research integrity and academic integrity.